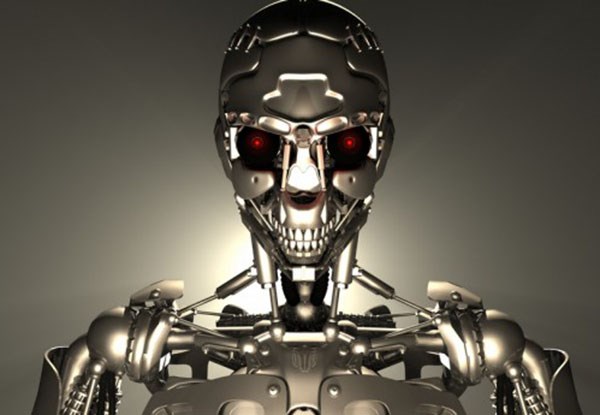

Does the world need lethal, autonomous robots that can engage targets without human intervention? Anyone who's ever seen an episode of Star Trek or a science fiction movie like The Terminator or The Matrix knows the answer is a resounding "no." That's because in every movie, television show or comic book that has ever depicted a so-called "killer robot," the thing inevitably goes bat-crap haywire and decides its fun new directive is to "Kill All Humans."

As a human, I feel this is totally a drawback to the technology.

Of course, in the Star Trek universe, the rogue robot or killer computer is always talked into self-destructing by Capt. Kirk's convoluted logic. But what's the world to do if William Shatner is busy shooting a Priceline commercial or a T.J. Hooker reunion show when it all goes down?

That’s probably why members of the United Nations (UN) met this month in Geneva to discuss the subject of Terminator-type tech running amok.

The event is called the Convention on Certain Conventional Weapons (CCW) Meeting of Experts on Lethal Autonomous Weapons Systems. Sure, I guess that’s a more official-sounding title than the “Crazy Killer Robot Conference,” but to each his own.

The CCW, consisting of 117 states — including the world’s major powers — exists to ban or limit the use of “weapons that kill civilians indiscriminately or cause unnecessary pain,” such as land mines and lasers designed to blind people.

The group will try to reach a consensus on the definition of an autonomous weapon, which may be anything from a human-like robot to a fully autonomous drone, and then discuss the legal and ethical questions behind the use of such weapons.

On one side of the argument is the fear that deadly robots may not consistently obey humanitarian law, particularly in tricky situations, and that they may do things that are logically sound from a computer's point of view but morally flawed from a human-who-just-got-vaporized point of view. On the other side are experts who think movies have made us all paranoid.

Those guys are probably robots, though.

It’s a weird debate, and one made even more so by the fact that the technology doesn’t even exist yet.

We do have semi-autonomous machines, namely drones, already being used in conflicts, and as we've seen in news reports, they kill both the "bad guys" and innocent civilians. How come I've never heard of a UN "Convention on Preventing Killer Humans from Accidentally Targeting the Wrong People"?

They’re worried about out-of-control robots when, looking at history, we seem to be pretty machine-like in our ability to efficiently kill humans ourselves.

But, as with blinding laser weapons, which the CCW banned before the technology was even a reality, the delegates say they hope to establish some guidelines before it's too late.

Meanwhile, in another news story this month, I read that the U.S. military is seeking to build a robot capable of moral reasoning, funded by a $7.5 million grant from the Office of Naval Research.

Let’s just hope someone is keeping William Shatner on standby.